Affective Digital Twin Concept

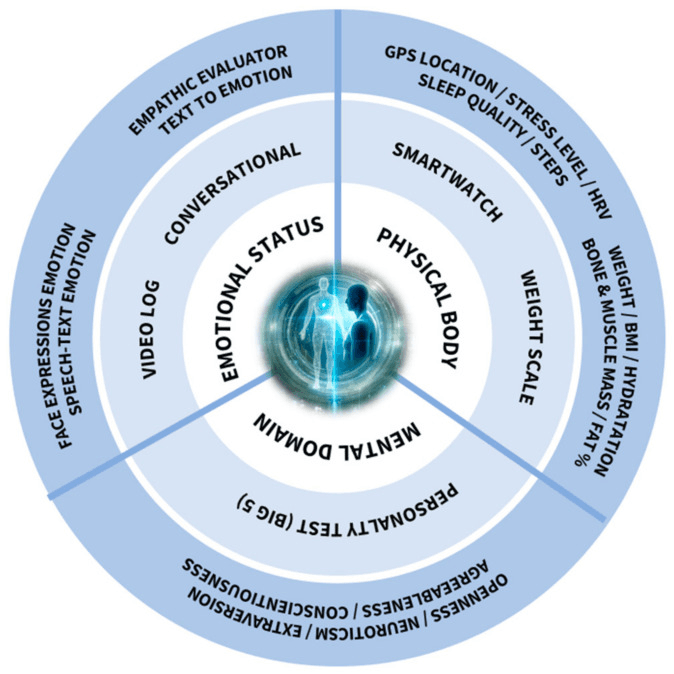

Affective Digital Twin (ADT) refers to a digital replica of an individual that encapsulates not only their physical state but also their cognitive and emotional characteristics. It builds upon affective computing (which enables machines to recognize and simulate human emotions) and digital twin technology (virtual models continuously updated to reflect a real entity) (From Digital Twin to Cognitive Companion | Psychology Today) (AI Tunes into Emotions: The Rise of Affective Computing – Neuroscience News). Existing digital human avatars often “lack realistic affective traits, making emotional interaction with humans difficult” ([2308.10207] Affective Digital Twins for Digital Human: Bridging the Gap in Human-Machine Affective Interaction). ADTs address this gap by integrating data across the physical, mental, and emotional domains of a person into one enriched model (Human Digital Twin in Industry 5.0: A Holistic Approach to Worker Safety and Well-Being through Advanced AI and Emotional Analytics – PMC). For example, a human digital twin can combine physiological signals (heart rate, sleep, etc.), psychological profiles, and emotional expressions to maintain an accurate real-time reflection of its human counterpart (Human Digital Twin in Industry 5.0: A Holistic Approach to Worker Safety and Well-Being through Advanced AI and Emotional Analytics – PMC). A recent framework for ADTs highlights key components: affective perception (sensing user emotions via facial expressions, voice, physiology, etc.), affective modeling (representing the user’s affective state in the twin), affective encoding (storing emotional data in the digital model), and affective expression (the twin exhibiting or communicating emotion) ([2308.10207] Affective Digital Twins for Digital Human: Bridging the Gap in Human-Machine Affective Interaction). Grounded in advancements in AI, human-computer interaction and affective science, ADTs thus serve as a “functional mirror” of who we are – capturing our behaviors, reactions, and feelings in digital form (From Digital Twin to Cognitive Companion | Psychology Today).

Figure: High-level architecture of a Human Digital Twin (HDT) model integrating physical, mental, and emotional domains (Human Digital Twin in Industry 5.0: A Holistic Approach to Worker Safety and Well-Being through Advanced AI and Emotional Analytics – PMC). In this example, sensors like smartwatches and smart scales feed the Physical Body domain (e.g. location, heart rate variability, sleep quality, weight and fitness metrics). Psychological assessments (Big-5 personality traits) inform the Mental Domain, while AI-driven emotion recognition from face, voice, and text populates the Emotional Status. Together, these components form a comprehensive digital profile.

Recent developments in affective computing have greatly enhanced the fidelity of such twins. Modern deep learning models can detect complex emotional cues with high accuracy, enabling rich affective data for the twin. For instance, one study built a “digital twin model” for real-time emotion recognition from EEG brainwave signals, achieving near-perfect classification of emotional states ( Envisioning the Future of Personalized Medicine: Role and Realities of Digital Twins – PMC ). Multi-modal emotion recognition – analyzing facial expressions, voice tone, text sentiment, physiological signals, etc. – provides a holistic view of a person’s affective state. By fusing these inputs, ADTs can represent both transient emotions (like momentary stress or joy) and more stable traits (like personality and mood patterns). Crucially, ADTs are not static; they update continuously as new data streams in, ensuring the digital self remains an up-to-date, context-rich reflection of the individual ( Human Digital Twin in Industry 5.0: A Holistic Approach to Worker Safety and Well-Being through Advanced AI and Emotional Analytics – PMC ). In summary, an Affective Digital Twin is conceived as the most accurate and enriched digital representation of an individual, unifying their physical condition, cognitive state, and emotional experience into a single living model.
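To make the three-domain structure described above more concrete, the following is a minimal sketch of how such a profile might be held in memory. Every class name, field name, and value scale here is an illustrative assumption and is not taken from the cited HDT architecture; the only point is that physical, mental, and emotional data sit side by side and that the twin records when it was last refreshed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class PhysicalBody:
    # Sensor-derived readings; None means "not yet observed".
    heart_rate_variability_ms: Optional[float] = None
    sleep_quality: Optional[float] = None  # hypothetical 0..1 scale
    weight_kg: Optional[float] = None


@dataclass
class MentalDomain:
    # Big-5 trait scores keyed by trait name (hypothetical 0..1 scale).
    big5: Dict[str, float] = field(default_factory=dict)


@dataclass
class EmotionalStatus:
    # Most recent emotion label per modality, e.g. {"face": "joy"}.
    by_modality: Dict[str, str] = field(default_factory=dict)


@dataclass
class HumanDigitalTwin:
    physical: PhysicalBody = field(default_factory=PhysicalBody)
    mental: MentalDomain = field(default_factory=MentalDomain)
    emotional: EmotionalStatus = field(default_factory=EmotionalStatus)
    last_updated: Optional[datetime] = None

    def ingest_emotion(self, modality: str, label: str) -> None:
        """Record the latest recognized emotion for one modality."""
        self.emotional.by_modality[modality] = label
        self.last_updated = datetime.now(timezone.utc)


# Example: a fresh twin receives one trait score and one emotion reading.
twin = HumanDigitalTwin()
twin.mental.big5["openness"] = 0.7
twin.ingest_emotion("voice", "calm")
```

A real system would presumably keep a time series per signal rather than only the latest value; the single-value fields are a simplification for readability.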

Federated Learning and Privacy-Preserving Technologies

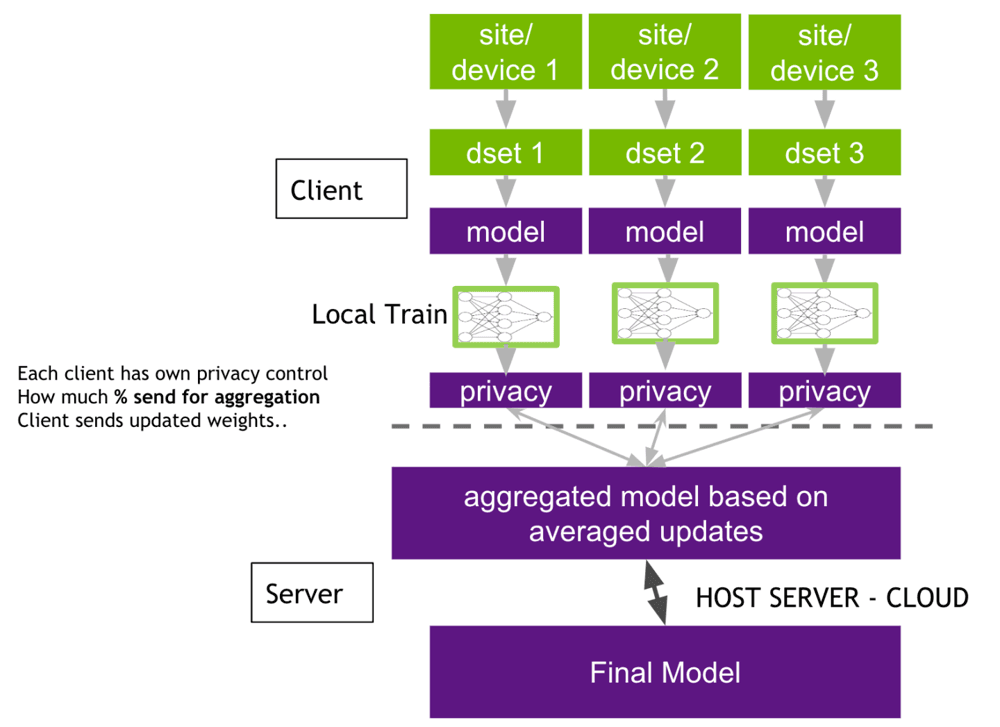

Building an ADT involves personal, sensitive data – raising challenges for privacy and user agency. Federated learning (FL) and related privacy-preserving technologies are key to ensuring data security while improving AI models across decentralized devices. In federated learning, model training is performed collaboratively on the users’ own devices, without centralizing raw personal data (Federated Learning Meets Homomorphic Encryption – IBM Research) (Federated learning background and architecture – NVIDIA Docs). Each device (smartphone, wearable, etc.) computes updates to the AI model using its local data (e.g. the user’s sensor readings or interactions), and only these model updates – not the raw data – are sent to a server for aggregation into a global model (Federated learning background and architecture – NVIDIA Docs). This process allows the combined model to improve from the experience of many users while keeping individuals’ data siloed on their devices. In essence, FL “enables collaborative training without sharing any data,” yielding strong collective AI models “while still protecting data privacy.” (Federated learning background and architecture – NVIDIA Docs)
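The round structure described above (local training, then server-side aggregation of updates) can be sketched in a few lines. This is a toy illustration of sample-weighted federated averaging on a two-parameter linear model, not the implementation of any of the cited platforms; the function names, learning rate, and data are assumptions made for the example.

```python
from typing import List, Sequence, Tuple

Weights = List[float]          # [slope, intercept] of a toy model y = w*x + b
Dataset = Sequence[Tuple[float, float]]


def local_update(global_weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Tuple[Weights, int]:
    """Run a few SGD passes on one client's private data.

    Returns the locally trained weights and the local sample count.
    The raw (x, y) pairs never leave this function.
    """
    w, b = global_weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return [w, b], len(data)


def federated_average(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Server side: average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(wts[i] * n for wts, n in updates) / total for i in range(dim)]


def run_round(global_weights: Weights, client_datasets: Sequence[Dataset]) -> Weights:
    """One federated round: broadcast, train locally, aggregate."""
    updates = [local_update(list(global_weights), d) for d in client_datasets]
    return federated_average(updates)


# Two hypothetical clients whose data follow y = 2x.
clients = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
new_weights = run_round([0.0, 0.0], clients)
```

Only the `(weights, sample_count)` pairs cross the client boundary in this sketch. In practice those updates can still leak information, which is what the privacy techniques in the following list are meant to address.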

To further safeguard personal data in an ADT, state-of-the-art privacy-enhancing technologies are applied, often in combination with FL. Key approaches include:

- Differential Privacy (DP): This technique injects carefully calibrated noise into data or model updates to mask the contribution of any single individual. DP provides a mathematical guarantee that an algorithm’s output reveals little about any one person, with the leakage bounded by a privacy parameter. When used in federated learning, each user’s model update can be noised so that adversaries are limited in their ability to reconstruct the original data or to determine whether a particular user’s data was included. In other words, “DP has emerged as a robust solution for safeguarding privacy in AI systems,” mitigating inference attacks such as model inversion and membership inference.

- Homomorphic Encryption (HE): HE allows computations to be performed on encrypted data. In the FL context, users encrypt their model updates before sending them to the server. The server can then aggregate these updates without ever decrypting them (Federated Learning Meets Homomorphic Encryption – IBM Research). “Fully homomorphic encryption enables computation on encrypted inputs and produces results in encrypted form,” so the central aggregator never sees raw model parameters (Federated Learning Meets Homomorphic Encryption – IBM Research). Only the final aggregated model (potentially sent back to users) is decrypted with a key, ensuring that individual contributions remain unintelligible to any eavesdropper or even the server itself (Federated Learning Meets Homomorphic Encryption – IBM Research). This greatly reduces the risk in scenarios where the server might be untrusted or compromised.

- Secure Multi-Party Computation (SMC): SMC is a set of cryptographic protocols that allow multiple parties to jointly compute a function over their inputs while keeping those inputs private. In an ADT system, SMC can enable, for example, multiple user devices or stakeholders to compute aggregate statistics (or train a model) on their combined data without any party learning the others’ data. Essentially, the computation is distributed in such a way that each party learns only the final result (or their assigned piece of it) and nothing else. SMC techniques (such as secret sharing or garbled circuits) add another layer of protection, ensuring that even if one device is breached, it cannot reveal other devices’ data during the collaborative computation.

- Client-Side Heavy Architecture: A “privacy by design” principle is to perform as much processing as possible on the client (user’s device) side, minimizing what is sent to any server. This client-side heavy approach includes on-device data storage and analytics, and sending out only high-level insights or anonymized results. For example, Apple’s system for privacy-preserving analytics employs local differential privacy, where “data is randomized on the user’s device before being sent, so the server never receives raw data.” (Learning with Privacy at Scale – Apple Machine Learning Research) In practice, this means an ADT could analyze your emotional data (heart rate spikes, facial expressions, journal entries, etc.) locally on your phone or wearable. Only aggregated trends or model parameters – often already anonymized or encrypted – leave the device. By keeping personal data local, risks of centralized data breaches or misuse are dramatically reduced. A client-side architecture also reinforces individual agency: the user maintains direct possession of the raw data, and can control or audit what their device shares.
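As a small illustration of the local differential privacy idea mentioned in the list above, the sketch below uses classic randomized response on a single yes/no value (for example, a hypothetical "felt stressed today" flag). This is a textbook mechanism chosen for brevity, not Apple's production algorithm, and the function names and parameters are assumptions.

```python
import math
import random
from typing import Sequence


def truth_probability(epsilon: float) -> float:
    """Probability of reporting the true bit under epsilon-local DP."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize_bit(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Runs on the device: flip the bit with probability 1 - p before sending."""
    return true_bit if rng.random() < truth_probability(epsilon) else not true_bit


def estimate_rate(reports: Sequence[bool], epsilon: float) -> float:
    """Runs on the server: debias the observed rate of noisy 'yes' reports."""
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


# Simulate 20,000 hypothetical users, 30% of whom would truthfully say "yes".
rng = random.Random(0)
true_bits = [i % 10 < 3 for i in range(20000)]
reports = [randomize_bit(b, epsilon=1.0, rng=rng) for b in true_bits]
estimated = estimate_rate(reports, epsilon=1.0)
```

The server can recover the population-level rate reasonably well, while any single report remains deniable: each report is at most `exp(epsilon)` times more likely under one true value than under the other.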

Figure: Illustration of federated learning in a client–server architecture. Multiple client devices (e.g. site/device 1, 2, 3) each train a local model on their own data (local datasets 1, 2, 3). Privacy measures (differential privacy noise, encryption, etc.) can be applied to the model parameters before sharing. The server only receives the clients’ model updates (not raw data) and aggregates them (e.g. by averaging) into a global model (Federated learning background and architecture – NVIDIA Docs). This global model is then sent back to clients, improving their personal AI models without ever exposing individual data.

Overall, through techniques like FL, DP, HE, and SMC, ADT systems strive to “ensure the privacy and empowerment of users” (Policy ‹ Affective Computing — MIT Media Lab). The goal is that the user’s sensitive affective data (emotions, biometrics, thoughts) remain under their control and protected, even as the collective AI model continuously learns. By preserving individual agency in this way, these technologies mitigate the risks of centralized data processing – enabling powerful, personalized affective AI while upholding data security and privacy.
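The secret-sharing idea mentioned under Secure Multi-Party Computation can be illustrated with additive shares. In the sketch below, each party splits its private integer into random-looking shares so that only the total is ever reconstructed. It simulates all parties in one process, assumes honest participants, and assumes values are non-negative integers smaller than the modulus (for example, fixed-point encoded scores); the names and the modulus are illustrative choices.

```python
import secrets
from typing import List, Sequence

MODULUS = 2**61 - 1  # arbitrary large modulus chosen for this sketch


def share(value: int, n_parties: int) -> List[int]:
    """Split value into n_parties additive shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: Sequence[int]) -> int:
    """Compute the sum of all inputs without any party seeing another's input."""
    n = len(private_values)
    # Party i creates one share per party and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds the shares it received (one from every party).
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Publishing the partial sums reveals only their total.
    return sum(partial_sums) % MODULUS


total = secure_sum([3, 5, 10])  # three hypothetical per-device counts
```

Any individual share is uniformly random on its own, so a single intercepted message says nothing about the value it came from. Production protocols add protections against dropouts and dishonest parties that this sketch omits.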

Applications and Longitudinal Evolution

Affective Digital Twins open up a range of promising applications that leverage self-awareness and personalization. Key use cases include:

- Emotional Self-Awareness and Personal Growth: ADTs can serve as “mirrors” for individuals to better understand their own emotions and behaviors over time. By tracking mood fluctuations, stress triggers, and emotional responses, a digital twin promotes greater self-awareness and reflection. For example, researchers have designed conversational agent companions that prompt users to label and verbalize their feelings, helping them practice emotional self-awareness (Designing Conversational Agents for Emotional Self-Awareness — MIT Media Lab). Participants found that such an AI agent made it comfortable to self-disclose and helped them reflect on their emotions (Designing Conversational Agents for Emotional Self-Awareness — MIT Media Lab). This kind of personal ADT could similarly act as a virtual coach, nudging users to recognize patterns in their emotional life (e.g. noticing that social media use increases anxiety, or that exercise improves mood) and offering personalized strategies to improve well-being. Over the long term, engaging with an ADT in this way may bolster one’s emotional intelligence, resilience, and self-regulation habits.

- Mental Health Monitoring and Support: In healthcare, affective digital twins are poised to revolutionize mental health tracking and personalized interventions. Affective computing already shows promise in “aiding various processes aimed at enhancing mental well-being.” (Theme Issue: Affective Computing for Mental Well-Being) By continuously monitoring emotional and physiological indicators, an ADT can act as an early warning system for mental health issues. For instance, it could detect prolonged patterns of sadness, social withdrawal in communication, or physiological signs of chronic stress – flagging the risk of depression or anxiety relapse to the user or clinicians. Studies indicate that “accurately identifying patient emotions” via affective computing gives doctors more information to tailor treatments (Affective Computing for Healthcare: Recent Trends, Applications, Challenges, and Beyond). An affective twin could enable longitudinal mental health tracking: comparing a person’s current state to their historical baseline to discern subtle changes. If a concerning trend is detected, the system might proactively suggest coping resources (like breathing exercises when stress is high) or alert a medical professional if warranted (with the user’s consent). Such applications emphasize that the ADT is not just a short-term snapshot but a long-term companion that grows with the individual, continuously learning and adapting to better support their mental health.

- Individualized Learning and Education: ADTs have exciting potential in personalized education and training. By modeling a learner’s cognitive and affective state, a digital twin can enable truly individualized learning experiences. For example, an intelligent tutoring system with affective sensing might adjust its teaching strategy if it detects frustration or boredom in the student. Recent research on affect-aware learning systems shows that incorporating learners’ emotional signals can “significantly improve learning effectiveness, lower anxiety, and increase engagement.” ( The Influence of Affective Feedback Adaptive Learning System on Learning Engagement and Self-Directed Learning – PMC ) In one study, a platform that provided adaptive feedback based on the student’s emotional state (e.g. offering encouragement or adjusting difficulty when the student was anxious) led to higher self-directed learning and better attitudes toward learning ( The Influence of Affective Feedback Adaptive Learning System on Learning Engagement and Self-Directed Learning – PMC ). An ADT for a student could maintain a profile of which topics or learning activities tend to excite or frustrate that individual, their preferred learning modalities, and even track their confidence or stress in real time. Using this, educational content and pace can be tailored dynamically: e.g. the system might interrupt with a short fun quiz if it senses the learner’s attention waning, or provide extra hints when it detects confusion. Beyond academics, this concept extends to professional training and skills development – imagine a digital twin that knows your fear of public speaking and can simulate practice sessions with affective feedback to help you improve. By treating each learner as “a unique case study”, ADTs could enhance motivation, efficiency, and the overall learning experience.

A distinctive strength of ADTs is their ability to support longitudinal evolution – both of the individual and the AI model – through continuous monitoring. Unlike a one-off assessment, the digital twin persists over time, accumulating knowledge about the person’s emotional patterns, development, and life events. This longitudinal perspective unlocks insights into long-term affective trends. For the individual, it means the ADT can highlight, for example, how far their emotional health has come over a year of therapy, or how their stress levels cycle seasonally. By making these trends visible, the twin empowers the user to practice better self-regulation – they can see triggers and improvements, reinforcing positive behavior changes. For the AI, longitudinal data allows it to become more personalized and predictive: the longer an ADT observes a person, the better it can anticipate their needs or moods. Researchers note that human emotional behavior is dynamic and evolving, and affective models must adapt to remain accurate over time. Indeed, an ADT can be continually fine-tuned with new data (via lifelong learning algorithms), ensuring that as a person grows or their circumstances change, the digital twin’s cognitive and affective models also update. Some even envision future ADTs that act as “cognitive companions” – not only reflecting the user’s current self but actively aiding in their growth (From Digital Twin to Cognitive Companion | Psychology Today). Such a companion-like ADT could engage in dialogues, offer coaching, and evolve alongside the individual, ultimately enhancing long-term affective development and life outcomes.
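One simple way to picture "comparing a person’s current state to their historical baseline" is an exponentially weighted running mean and variance, with new readings scored against it. The sketch below is only an illustration of that idea: the smoothing factor, warm-up length, threshold of 3, and sample readings are all assumptions, and a deployed system would need a clinically validated method.

```python
from dataclasses import dataclass


@dataclass
class RunningBaseline:
    alpha: float = 0.1     # smoothing factor (assumed); higher adapts faster
    min_samples: int = 5   # warm-up period before any reading is scored
    mean: float = 0.0
    var: float = 0.0
    n: int = 0

    def update(self, x: float) -> float:
        """Score x against the current baseline, then fold x into it.

        Returns a z-score; 0.0 is returned during the warm-up period.
        """
        if self.n >= self.min_samples and self.var > 0.0:
            z = (x - self.mean) / self.var ** 0.5
        else:
            z = 0.0
        if self.n == 0:
            self.mean = x
        else:
            delta = x - self.mean
            self.mean += self.alpha * delta
            self.var = (1.0 - self.alpha) * (self.var + self.alpha * delta * delta)
        self.n += 1
        return z


# Hypothetical resting heart-rate readings; the last one is unusual.
readings = [60.0, 62.0, 58.0, 61.0, 59.0, 60.0, 61.0, 90.0]
baseline = RunningBaseline()
flags = [abs(baseline.update(x)) > 3.0 for x in readings]
```

Because the baseline keeps adapting, a sustained change eventually becomes the new normal. Whether that is desirable depends on the application, and a slower `alpha` or a separately stored long-term baseline would behave differently.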

Ethical and Security Considerations

The deployment of affective digital twins raises important ethical and security considerations. By their nature, ADTs involve deeply personal data – emotions, behaviors, perhaps intimate thoughts – and thus the stakes for misuse or harm are high. Key considerations include preventing manipulation, ensuring fairness, and balancing power dynamics, as well as adhering to emerging regulations and ethical guidelines:

- Preventing Manipulative Abuse: In the wrong hands, a detailed affective profile of a person could be used to manipulate or exploit them. For example, an AI that knows when a user is sad or anxious could selectively advertise products or propaganda at vulnerable moments. Indeed, scholars have warned that emotion-sensing AI “could empower authoritarian regimes to monitor the inner lives of citizens”, or enable companies to unfairly influence consumers. To guard against this, strong policies must limit how ADTs and emotion data can be used. Transparency is critical – users should know what data is being collected and for what purpose. Informed consent and the ability to opt out or turn off certain sensing features give users agency to prevent unwanted surveillance. System designs can also include manipulation-resistance, for instance by disallowing micro-targeted content based on emotional state or by keeping the most sensitive inferences (like a diagnosis of depression) purely on-device unless the user chooses to share it with a clinician. Calm technology principles (avoiding intrusive or deceptive interactions) and privacy-by-design can ensure the ADT works for the user’s benefit, not against it (Policy ‹ Affective Computing – MIT Media Lab). Ultimately, aligning the ADT’s objectives with the user’s well-being – and establishing oversight for any external use of affective data – helps prevent scenarios where the technology could be turned into a tool of manipulation.

- Ensuring Fairness and Avoiding Bias: Affective AI systems must be fair and inclusive, meaning they should work equitably across different populations and not reinforce harmful biases. This is a known concern in emotion recognition technology: many facial-expression models, for instance, have shown accuracy disparities across ethnic groups or cultures, since emotional expressions can vary widely. If an ADT misreads the emotions of a particular demographic (say, misclassifying people of a certain race as angry more often), it could lead to unequal outcomes or stigma. To ensure fairness, developers need to train ADT models on diverse, representative data and involve experts in affective science to interpret cultural nuances of emotion. Continuous bias audits should be conducted – checking that the ADT’s assessments are consistent and accurate for users of different ages, genders, cultural backgrounds, etc. Moreover, user-specific calibration can help: the ADT should learn an individual’s personal expression style rather than apply one-size-fits-all labels. From an ethical standpoint, fairness also means the ADT’s benefits should be accessible to those who need them (e.g. mental health support via ADTs should not be limited to affluent users only). By actively mitigating bias and ensuring reliability of emotion sensing for all groups, we build trust that the ADT will treat its user justly and won’t inadvertently discriminate or misinform.

- Balancing Power and Agency: The introduction of ADTs creates a new dynamic between individuals, AI service providers, and possibly other parties (healthcare providers, employers, etc.) who might offer or use these digital twins. It’s vital to balance power so that the individual retains ultimate control over their digital likeness and data. Without safeguards, there’s a risk of power asymmetry – for instance, a company operating a centralized ADT platform could unilaterally change how the twin behaves or monetize the user’s emotional data. To counter this, strong data rights should be in place. Users should own or at least have clear rights to their ADT data, with abilities to access it, correct it, or delete it (the “right to be forgotten”). They should be able to decide who else (if anyone) can access their digital twin’s information – for example, opting to share certain health-related insights with a doctor, but not with an insurer or employer. Technical measures like the client-side architecture discussed earlier inherently empower users by keeping data local and identities separate from affective data (Policy ‹ Affective Computing — MIT Media Lab). Additionally, explaining the ADT’s decisions or interpretations (explainable AI) can help users understand and contest how the system is influencing them, rather than feeling mystified or dominated by the AI’s judgments. The principle of human autonomy must be respected at all times: the ADT should augment human decision-making, not replace it or pressure the user. In design, this might mean the ADT suggests coping strategies for stress but leaves the choice to the user, or it might mean an ADT in education advises a student but doesn’t override the student’s own learning goals. Maintaining this human-in-the-loop approach keeps power in the user’s hands and fosters a healthy partnership between person and digital twin (Policy ‹ Affective Computing — MIT Media Lab).

- Security Measures: From a security perspective, ADT systems must be hardened against breaches and malicious attacks. Personal affective data is extremely sensitive – a hack leaking years of someone’s emotional diary or biometric signals would be a dire privacy violation. Thus, encryption (both in transit and at rest), robust access control, and secure authentication are baseline requirements (Policy ‹ Affective Computing – MIT Media Lab). Techniques like federated learning, differential privacy, and secure multi-party computation (discussed earlier) further mitigate the impact of any single point of failure. It’s also important to consider insider threats and abuse: those who design or operate ADT services should not have unchecked access to user data. Solutions include strict role-based data access (with most data being processed by algorithms without human viewing), audit logs for any data access, and possibly decentralized or client-run infrastructure to remove the need to trust a central authority. Regular security audits and compliance with cybersecurity standards will be crucial as ADTs become more common. In sum, a multi-layered security posture is needed to ensure that users can trust the integrity and confidentiality of their digital twin.

- Regulatory and Ethical Frameworks: Given these concerns, regulatory bodies and professional organizations are actively developing guidelines specific to affective and personal AI systems. For instance, the Partnership on AI released a report on the ethics of AI with emotional intelligence, highlighting issues such as the scientific validity of emotion inferences and calling for restrictions on high-stakes use cases like hiring. In fact, public backlash to practices like automated emotion screening of job candidates has already led to some legislation and bans. Lawmakers are starting to catch up: “some U.S. cities and states have begun to regulate AI related to affect and emotions,” and data protection laws are classifying emotion data as sensitive. The California Consumer Privacy Act (CCPA), for example, gives users the right to know about and delete data that companies collect about their affective state (treating facial images, voice, and other affect-related data as protected biometric information). In Europe, the AI Act, adopted in 2024, treats many emotion recognition systems as high-risk and prohibits emotion inference in workplaces and educational institutions, with narrow exceptions for medical and safety purposes. Meanwhile, industry standards are emerging: the IEEE’s Ethically Aligned Design includes chapters on affective computing, and researchers have outlined ethical frameworks to guide “affectively-aware AI” development with principles of fairness, transparency, and accountability. It’s widely agreed that ethics in this domain “should not just prescribe what we shouldn’t do, but also illuminate what we should do – and how.” In practice, adhering to such guidelines means that any ADT deployment should undergo ethical review, obtain informed consent from users, and include governance mechanisms for oversight. By following robust ethical frameworks and complying with evolving regulations, developers can ensure ADTs are used responsibly – maximizing benefits like self-awareness and support, while minimizing risks of harm, manipulation, or inequity.
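The continuous bias audits called for under fairness above can start from something as simple as comparing recognition accuracy across groups. The sketch below computes per-group accuracy and the largest gap between groups on a handful of made-up records. The group labels, emotion labels, and the choice of accuracy as the metric are assumptions for illustration, and a real audit would use larger samples and additional metrics.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, str]  # (group, true_label, predicted_label)


def accuracy_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of correct emotion predictions within each group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group: Dict[str, float]) -> float:
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Made-up evaluation records for two hypothetical groups.
records = [
    ("group_a", "joy", "joy"),
    ("group_a", "anger", "anger"),
    ("group_a", "neutral", "neutral"),
    ("group_a", "sadness", "neutral"),
    ("group_b", "neutral", "anger"),
    ("group_b", "joy", "joy"),
]
per_group = accuracy_by_group(records)
gap = max_accuracy_gap(per_group)
```

A gap above some agreed tolerance would then trigger a closer look at the training data or per-user calibration for the affected group.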

Future Directions

Affective Digital Twins lie at the intersection of multiple disciplines, and future advancements will likely emerge from bridging technical innovations with deeper psychological insight. Here we highlight emerging research gaps and potential directions, as well as the crucial role of interdisciplinary collaboration:

- Enhancing Emotional Realism and Depth: One research gap is achieving a more nuanced understanding of human emotions within ADTs. Many current models still rely on basic emotion categories (happy, sad, angry, etc.) or visible signals like facial expressions. However, human affect is rich and context-dependent – emotions blend, vary in intensity, and are expressed differently across cultures and personalities. Future ADTs will need to incorporate advanced affective models that go beyond surface cues. This could involve integrating psychological theories of emotion (such as appraisal theory or affective dimensions like arousal and valence) so that the digital twin can interpret why a user feels a certain way, not just how they appear on the outside. It also means expanding the sensory inputs: for example, using natural language processing to gauge sentiment in the user’s written or spoken content, or using wearable EEG and physiological sensors to detect internal stress signals that might not show on the face. Large-scale, longitudinal emotion datasets – while respecting privacy – will be valuable for training these richer models. An important line of work is addressing the scientific debate about emotion recognition; studies have questioned the universality of facial emotion expressions, urging development of context-aware algorithms that learn a person’s unique affective “language.” In short, making ADTs emotionally intelligent in an authentic way remains an open challenge, calling for innovation in both sensing and modeling of affect.

- Multimodal Fusion and Context Awareness: As hinted, ADTs will increasingly draw on multimodal data – combining vision, audio, text, and biometric signals. A key technical challenge is multimodal fusion: how to intelligently merge these streams to form a coherent picture of the user’s state. Recent trends show a focus on creating multimodal affect datasets and refining fusion techniques (AI Tunes into Emotions: The Rise of Affective Computing – Neuroscience News). Future research may leverage deep learning architectures that can learn correlations between, say, tone of voice and heart rate changes to better infer anxiety, or between facial micro-expressions and the semantic content of what the user is saying. Moreover, context is king: the same biometric pattern might mean very different things in different situations (a racing heart could mean excitement during exercise or panic during a meeting). ADTs will need to incorporate contextual information – perhaps via situational awareness (calendar events, location, time of day) – to interpret emotions accurately. This could involve hybrid models that blend data-driven learning with symbolic knowledge about the user’s environment and history. In coming years, we might see ADTs that understand, for example, that “Monday mornings” or “before exams” are contexts where the user’s stress indicators have a different baseline. By making context a first-class input, ADTs can evolve from reactive emotion trackers to proactive, situation-aware assistants.

- Privacy-Enhancing Innovation: As ADTs become more prevalent, new privacy-preserving techniques will continue to develop in tandem. Federated learning and differential privacy will be refined to better fit the affective domain – for instance, ensuring that sharing patterns or anomalies in emotional data doesn’t inadvertently reveal identities. Researchers are exploring approaches like encrypted user modeling, where even the personalization of the model happens in a privacy-preserving manner. One frontier is the use of trusted execution environments or decentralized blockchain-based architectures to give users cryptographic assurance of their data’s security. We can expect future ADTs to possibly run on personal secure hardware (like secure enclaves on a phone) that can be verified by the user. Additionally, transparency tools (like privacy nutrition labels for AI) might be developed so users can inspect and understand how their digital twin handles data. In essence, keeping trust will be an arms race with threats – but it’s spurring a lot of creative research, which will remain a core aspect of ADT innovation.

- Interdisciplinary Collaboration: By nature, building a truly effective Affective Digital Twin requires expertise from many domains. We anticipate even tighter collaboration between technologists and experts in human behavior. Psychologists and neuroscientists will be integral to decipher the complexities of affect and to validate that ADT models correspond to real human experiences (ensuring we’re not modeling “phantom” emotions that don’t exist). Collaboration with cognitive scientists can help integrate aspects like memory, attention, and decision-making into the twin – aligning with how the human mind works. Ethicists and legal scholars, meanwhile, will continue to guide responsible design and compliance with social norms. Importantly, the users themselves (through participatory design or user studies) should be co-creators of ADT technology – reflecting their values and needs. This interdisciplinary approach is already evident: affective computing has always “integrated multiple disciplines, including computer science, engineering, psychology, and neuroscience” (AI Tunes into Emotions: The Rise of Affective Computing – Neuroscience News), and this will only deepen with ADTs. Educational researchers will join to apply ADTs in learning, clinicians to apply them in therapy, and so on. Such collaborations will shape the next generation of ADTs to be holistic and human-centric, rather than just engineering-driven.

- Emerging Use Cases and Research Areas: Looking ahead, new application domains will also drive ADT research. The rise of the metaverse and virtual reality is one – realistic digital avatars in social VR or gaming will need ADT technology to convey emotions believably, leading to advances in real-time affective computing ([2308.10207] Affective Digital Twins for Digital Human: Bridging the Gap in Human-Machine Affective Interaction). Another area is affective robotics, where a robot equipped with an ADT of its user could better cooperate and attend to that person’s needs (for instance, a robot assistant that knows when you’ve had a bad day and adjusts its interaction style accordingly). Group or Team Digital Twins might emerge, modeling the emotional dynamics of teams or families to improve collaboration and empathy among members – an area blending social psychology with multi-user data. On the technical side, integrating ADTs with large language models (like GPT-style AI) opens possibilities: the language model could serve as a “brain” for the twin’s cognitive and conversational abilities, while the affective data grounds it in the user’s real feelings. This could yield highly sophisticated personal AI assistants that not only complete tasks but also provide emotional support and mentorship, essentially becoming the “cognitive companion” that some have envisioned (From Digital Twin to Cognitive Companion | Psychology Today). Of course, this raises new questions around identity (where does the AI end and the human begin in such a partnership?) and will be fertile ground for future research.
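The multimodal fusion and context-awareness ideas in the list above can be illustrated with a deliberately simple late-fusion scheme: each modality reports an arousal estimate with a confidence, the estimates are combined by confidence-weighted averaging, and the result is read against a context-specific baseline. This is only one of many possible fusion strategies, and the modality names, contexts, baselines, and margin are all invented for the example.

```python
from typing import Dict, Optional, Tuple

# modality -> (arousal estimate in [0, 1], confidence in [0, 1])
Estimates = Dict[str, Tuple[float, float]]


def fuse_arousal(estimates: Estimates) -> Optional[float]:
    """Confidence-weighted average of per-modality arousal estimates."""
    total_conf = sum(conf for _, conf in estimates.values())
    if total_conf == 0:
        return None  # no usable signal from any modality
    return sum(a * conf for a, conf in estimates.values()) / total_conf


def interpret(arousal: float, context: str,
              baselines: Dict[str, float], margin: float = 0.2) -> str:
    """Compare fused arousal with the baseline expected in this context."""
    baseline = baselines.get(context, baselines["default"])
    if arousal > baseline + margin:
        return "elevated"
    if arousal < baseline - margin:
        return "subdued"
    return "typical"


# Hypothetical per-user baselines: high arousal is normal during exercise.
baselines = {"default": 0.4, "exercise": 0.8}
fused = fuse_arousal({"voice": (0.8, 0.5), "heart_rate": (0.6, 0.5)})

during_exercise = interpret(fused, "exercise", baselines)
during_meeting = interpret(fused, "meeting", baselines)
```

The same fused reading is labeled differently in the two contexts, which mirrors the racing-heart example: identical physiology, different interpretation once context is taken into account.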

In conclusion, Affective Digital Twins represent a convergence of affective science and digital technology aimed at creating faithful digital representations of ourselves. The field has advanced rapidly, but many developments lie ahead. By treating privacy, ethics, and interdisciplinary knowledge as central pillars, researchers are working to ensure these digital companions enhance our lives in meaningful, positive ways. As the gap between human emotion and its digital representation narrows, ADTs could become trusted partners that help us understand ourselves better, support our mental health and learning, and integrate into daily life. The journey is just beginning, and its success will depend on collaboration across science, engineering, and the humanities to realize the potential of affective digital twins responsibly.

References

Apple Differential Privacy Team. (2017). Learning with privacy at scale. Apple Machine Learning Journal, 1(8).

Amara, K., Kerdjidj, O., & Ramzan, N. (2023). Emotion Recognition for Affective Human Digital Twin by Means of Virtual Reality Enabling Technologies. IEEE Access, 11, 74216–74227. (Human Digital Twin in Industry 5.0: A Holistic Approach to Worker Safety and Well-Being through Advanced AI and Emotional Analytics – PMC)

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68.

Kairouz, P., et al. (2021). Advances and open problems in federated learning. Foundations and Trends in Machine Learning, 14(1–2), 1–210. (Discussion of federated learning paradigm) (Federated Learning Meets Homomorphic Encryption – IBM Research) (Federated learning background and architecture – NVIDIA Docs)

Lu, F., & Liu, B. (2023). Affective Digital Twins for Digital Human: Bridging the Gap in Human-Machine Affective Interaction. Journal of the Chinese Association for Artificial Intelligence, 13(3), 11–16. ([2308.10207] Affective Digital Twins for Digital Human: Bridging the Gap in Human-Machine Affective Interaction)

Picard, R. W. (1997). Affective Computing. MIT Press. (Foundational book defining affective computing)

Shen, J., Lecamwasam, K., Park, H. W., Breazeal, C., & Picard, R. W. (2023). Designing conversational agents for emotional self-awareness. In 2023 ACII Workshops (pp. 1–4). IEEE. (Designing Conversational Agents for Emotional Self-Awareness — MIT Media Lab)

Subramanian, B., Kim, J., Maray, M., & Paul, A. (2022). Digital twin model: a real-time emotion recognition system for personalized healthcare. IEEE Access, 10, 81155–81165. (Envisioning the Future of Personalized Medicine: Role and Realities of Digital Twins – PMC)

Partnership on AI. (2021). The Ethics of AI and Emotional Intelligence. (Report examining ethical issues in emotion AI)

IEEE Global Initiative on Ethically Aligned Design. (2019). Ethically Aligned Design, First Edition, Chapter 8: Affective Computing. IEEE Standards Association.

Liu, Y., et al. (2024). Affective Computing for Healthcare: Recent Trends, Applications, Challenges, and Beyond. arXiv preprint arXiv:2402.13589. (Affective Computing for Healthcare: Recent Trends, Applications, Challenges, and Beyond)

Huang, Y. M., et al. (2022). The influence of affective feedback adaptive learning system on learning engagement and self-directed learning. Frontiers in Psychology, 13, 855246. (The Influence of Affective Feedback Adaptive Learning System on Learning Engagement and Self-Directed Learning – PMC)

Gretsch, G. (2024). AI tunes into emotions: The rise of affective computing. Neuroscience News. (Summary of recent advances in affective computing) (AI Tunes into Emotions: The Rise of Affective Computing – Neuroscience News)

MIT Media Lab Affective Computing Group – Policy Statement. (n.d.). Policy and ethics in affective computing. (Outlines principles of privacy, consent, and mutuality in affective computing) (Policy ‹ Affective Computing — MIT Media Lab)

McMahan, B., et al. (2017). Communication-efficient learning of deep networks from decentralized data. In Proc. of AISTATS. (Introduces federated learning algorithm)

Dwork, C., & Roth, A. (2014). The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9(3–4), 211–407.

Ayoob, A., et al. (2022). Ethical considerations on affective computing: An overview. IEEE Access, 10, 16775–16787.

California Consumer Privacy Act. (2018). California Civil Code §1798.140. (Legal text defining biometric information to include emotion-related data)

Feng, Z., et al. (2024). Human digital twin in Industry 5.0: A holistic approach to worker safety and well-being through advanced AI and emotional analytics. Sensors, 24(2), 655. (Human Digital Twin in Industry 5.0: A Holistic Approach to Worker Safety and Well-Being through Advanced AI and Emotional Analytics – PMC)

Ong, D. C., et al. (2021). An ethical framework for guiding the development of affectively aware AI. In ACII 2021.